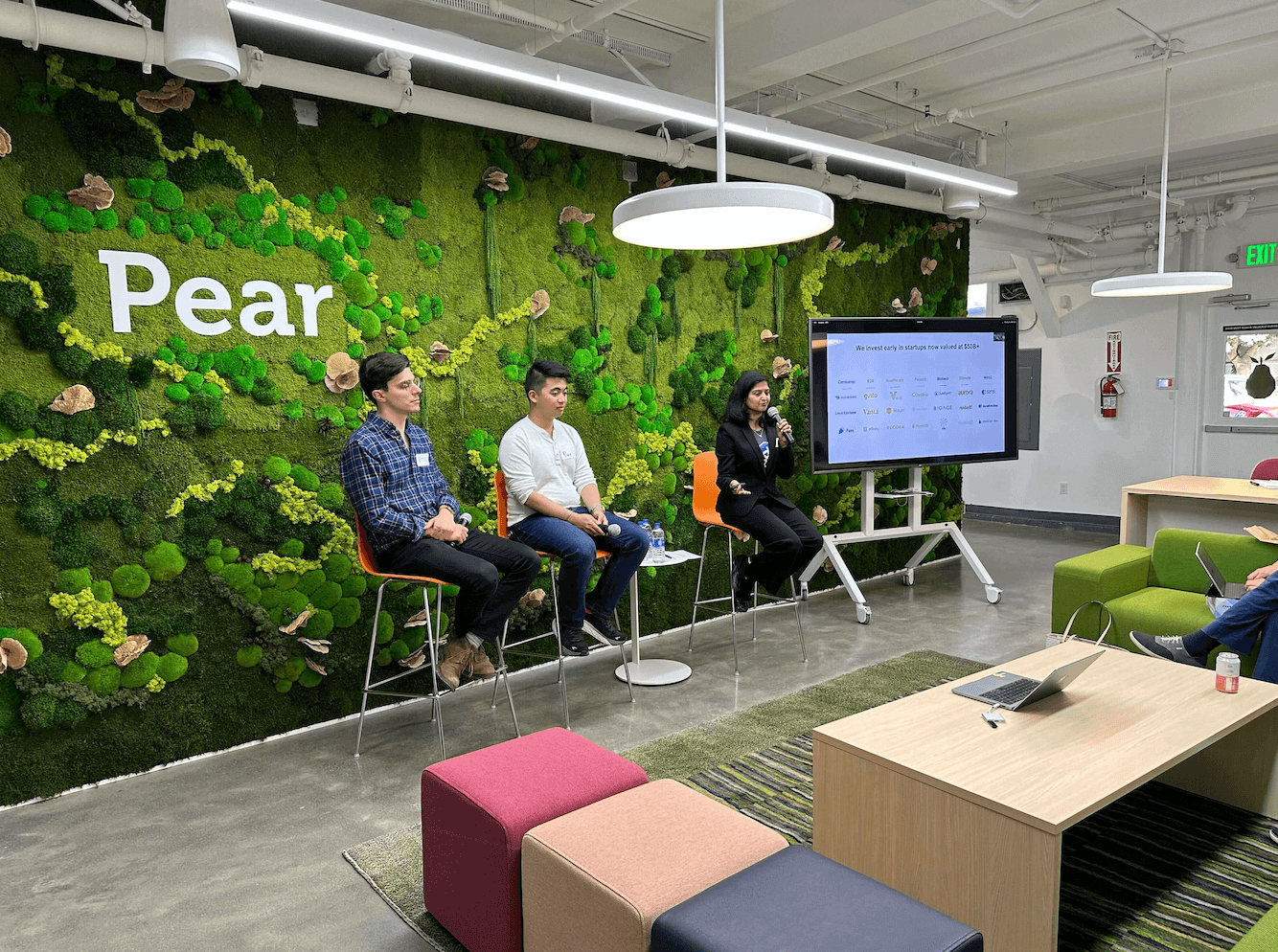

Over the past decade, Pear has spearheaded pre-seed rounds for exceptionally early-stage companies. At the beginning of this year, we designed PearX to be a 14-week bootcamp for ambitious founders, and the program has proven to be a breeding ground for category-defining pioneers such as Affinity, Xilis, Capella Space, Nova Credit, Cardless, and Viz.ai.

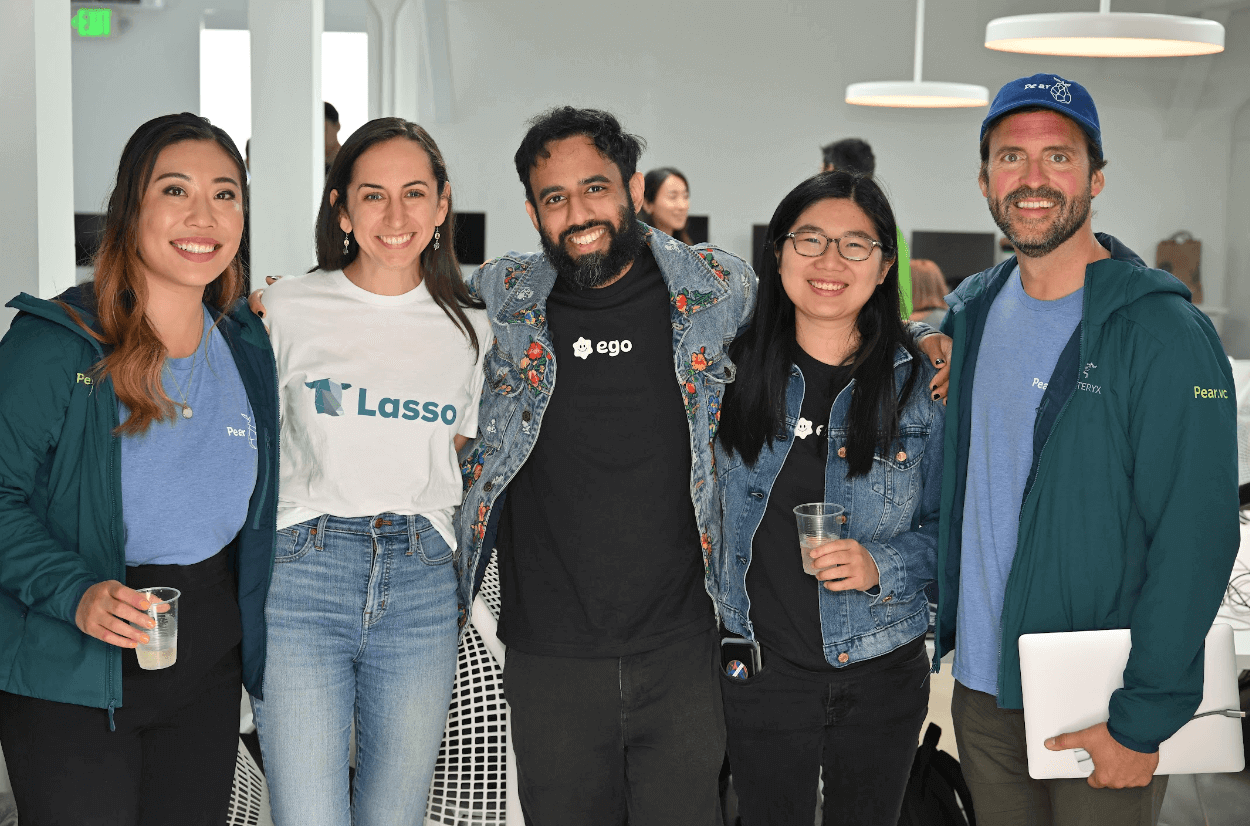

The W23 cohort of PearX was no exception: it consisted of thirteen cutting-edge companies pushing the boundaries of AI, healthcare, consumer products, fintech, and climate solutions. Our Demo Day for W23 took place on May 25th and was hugely successful: more than 1,500 investors tuned in to the livestream, and we’ve already facilitated more than 650 investor introductions for the 13 participating companies.

With an acceptance rate of less than 0.25%, these companies have risen to the top among a pool of 4,500 applicants, a testament to their exceptional talent and groundbreaking ideas. Within this cohort, we also have significant female leadership representation, with over 40% of the CEOs and CTOs being female (only 9% of venture-backed entrepreneurs are women). We’re proud that PearX W23 is setting a strong example of gender diversity and equity in entrepreneurship.

So, without further ado, let’s delve into the extraordinary companies that make up the PearX W23 cohort:

AI:

Hazel

Founders: Henry Weng and Vedant Khanna

Hazel is the AI-powered operating system for realtors that 10x’s their productivity and increases sales. Nearly half of a realtor’s work consists of repetitive backend tasks like middleman communication, document preparation, and project management. Realtors can’t manage backend work on their own as their business scales, so the largest ones operate like SMBs with staff, software, and assistants. Hazel integrates with a realtor’s email, text, and knowledge bases, leveraging AI to parse unstructured data and become their single system of record (and supercharged CRM). From that point, Hazel automates routine tasks using generative AI, freeing realtors to focus on getting more clients instead of more paperwork.

SellScale

Founders: Ishan Sharma and Aakash Adesara

SellScale is an AI-powered Sales Development Representative. SellScale streamlines the end-to-end process of setting demos for salespeople, addressing challenges such as high SDR turnover rates and low email conversion rates. By leveraging AI-generated copy that outperforms human-produced content, SellScale offers scalable and data-driven solutions, allowing sales teams to achieve superior results in their outreach efforts without excessive investments in personnel and tools.

Tare

Founders: Eileen Dai and Max Sidebotham

Tare is an AI-powered email marketing solution that makes data-driven automation easy for modern e-commerce brands. Until now, email has been an extremely manual and resource-heavy marketing channel, and it wasn’t possible to automate everything end to end. 66% of current e-commerce email spend goes to labor and agency service costs. Tare helps brands optimize their email marketing with automated customer segmentation, AI-generated content and imagery, and automated scheduling for delivery.

Saavi

Founders: Maya Mikhailov

Saavi revolutionizes enterprise AI deployment, eliminating costly and complex processes. This user-friendly platform enables effortless implementation without the need for developers or data scientists, providing quick deployment in minutes. With its intuitive interface and self-optimizing capabilities, Saavi empowers businesses to unlock the potential of AI, delivering tailored insights for informed decision-making in areas like fraud risk assessment, customer churn prediction, and growth identification. Embrace the future of AI deployment with Saavi and experience ease, speed, and accuracy in transforming your enterprise.

Consumer:

Champ

Founders: Ryan Rice

Champ is a disruptive fantasy sports platform that caters specifically to college sports fans. Unlike major platforms that focus on professional leagues, Champ offers an ownership-based fantasy experience for college football and basketball. It empowers fans to buy, sell, and collect digital trading cards featuring their favorite collegiate athletes, using them to construct lineups for fantasy leagues. By addressing the weak ties many fans have to professional leagues and capitalizing on the significant influence of college sports in certain areas, Champ allows users to actively participate in the excitement of college sports and forge deeper connections with their teams.

Ego

Founders: Vishnu Hari and Peggy Wang

Ego is an immersive live streaming platform that uses generative AI to create fully face-tracked 3D avatars. We believe in a future where streaming as a virtual avatar surpasses real-life live streaming, driven by the digital natives of Gen Z who seek pseudonymous online identities. To realize this vision, we developed an app that enables users to generate a 3D avatar that mirrors their facial expressions and to start live streaming on platforms like Twitch and YouTube in 90 seconds. Users can profit from selling virtual goods, customizing avatar appearances, engaging in entertaining mini-games, or orchestrating immersive role-plays.

AfterHour

Founders: Kevin Xu

AfterHour revolutionizes the retail stock trading experience by providing a pseudonymous and voyeuristic social network that focuses on verified trades and stock picking gurus, addressing the lack of trust and entertainment in the online trading community. By connecting brokerage accounts via Plaid and making portfolios public, users gain transparency and can distinguish between legitimate shareholders and random lurkers, while maintaining anonymity. AfterHour’s unique approach combines anonymous yet verified engagement, bridging the gap between authenticity and privacy in a way that no other platform currently does.

Pando

Founders: Charlie Olson and Eric Lax

Pando introduces Income Pooling, a solution that offers risk diversification and fosters a financially aligned community to address the increasing shift toward a winner-take-all economy. By leveraging machine learning and artificial intelligence, Pando accurately predicts future earnings, enabling groups of high-potential individuals to pool their income. With product-market fit in professional baseball and entrepreneurship, Pando has created a new asset class for all professionals in power-law careers by unlocking the value of potential income and reimagining the financial services experience for these individuals.

Juni

Founders: Vivian Shen

Juni revolutionizes personalized tutoring by bringing it online, addressing the scalability challenges and affordability issues of traditional private tutoring. With purpose-built AI models, Juni provides tailored content, questions, and hints to meet individual students’ needs while incorporating context and motivation. The platform establishes a feedback loop with students to customize instructor feedback and enhance the tutoring experience. By leveraging a specialized dataset and the expertise of top instructors, Juni ensures high-quality tutoring for all students, aiming to make educational support accessible and empowering them to thrive.

Climate:

Lasso

Founders: Nicole Rojas and David Pardavi

Lasso is reshaping on-farm emissions reduction with an automated software platform that streamlines data collection, carbon footprint calculation, and form filling, reducing the time spent from over 60 hours to just one hour per farm. Seamlessly integrating with existing on-farm software systems, Lasso extracts vital information and provides real-time insights through integration with carbon calculator tools. By automating verification, funding forms, and auditing reports, Lasso eliminates manual creation and consolidates farm, project, and emissions data for efficient management and cost savings, making GHG emissions reduction a reality at scale.

Nuvola

Founders: Janet Hur

Nuvola is an advanced battery materials company focused on enhancing battery profitability and eliminating the primary cause of battery fires. With its innovative Safecoat Direct Deposition Separator technology, Nuvola reduces reject material costs by 82% and improves yield by 50%, addressing the challenges faced by the lithium-ion battery industry, which is projected to exceed $200 billion in the next five years. Its solution tackles the error-prone process of folding separator film, which has been responsible for catastrophic fires and billions of dollars in recalls. By providing safer and more efficient batteries, Nuvola supports the growth of the electric vehicle, energy storage, and e-aircraft industries.

Healthcare:

Stellation

Founders: Michelle Xie and Diana Zhu

Stellation is rethinking patient-provider matching with its analytics platform, addressing the lack of accurate information in matching patients with healthcare providers based on their specific health needs. Current practices rely on proximity and availability, neglecting performance data and resulting in limited outcomes and cost savings. Stellation’s SaaS platform bridges this gap by leveraging claims and patient data to profile provider strengths and match individuals with the most suitable healthcare provider. Built on a research-backed methodology, this powerful solution is delivered via API integration for health plans or a user-friendly search interface for patients. By utilizing Stellation, health plans can achieve significant cost savings and improved outcomes for their members.

Enterprise:

Polimorphic

Founders: Parth Shah and Daniel Smith

Polimorphic revolutionizes government operations by automating back-office tasks and transforming how cities track and manage requests, replacing outdated analog methods with efficient digital solutions. With a significant reliance on post-it notes and paper files, government processes face inefficiencies and the impending challenge of a labor shortage. Polimorphic’s focus on the customer service layer of government empowers cities to streamline operations, enhance compliance, and improve the overall citizen experience by offering solutions for constituent requests, payment collection, request tracking, and data management.

Today’s markets are shifting faster than ever before, and Pear is dedicated to evolving alongside them. Each of these 13 companies from PearX W23 showcases immense potential for disrupting its industry and driving positive change through the power of technology.

At Pear, we are honored to play a pivotal role in the journey of these exceptional startups. We believe in the transformative power of early-stage investments and the tremendous impact they can have on shaping the future. As we continue to identify and nurture the next generation of trailblazers, we remain steadfast in our commitment to fostering innovation, driving growth, and unlocking the extraordinary potential within each entrepreneur we encounter.

If you want to partner with any of these companies, please check them out at: https://demoday.pear.vc/. For anyone who missed our Demo Day, we’ll be sharing videos of each team this Thursday, so stay tuned!